5G and Open RAN

Fifth-generation (5G) network technology is now the major focus of the global mobile industry, as subscribers throughout the world have come to depend on uninterrupted high-speed Internet access for their mobile applications and services wherever they go.

Dramatic changes in technology, network architecture, and available radio spectrum have paved the way for a new 5G mobile network. As the network becomes software-centric and embraces a cloud-based deployment model, an opportunity exists to create a standardized architecture, built on open software and open interfaces, that enables multivendor interoperability.

This Open RAN concept promises to change the economics, operations, and deployment models for future mobile networks.

Evolution of Mobile Standards

Global standards for digital mobile technology have progressed continuously since the technology was first deployed in the early 1990s. The advent of the Global System for Mobile communications (GSM) extended the capabilities of earlier analog systems, such as the Advanced Mobile Phone System (“AMPS”), with digitally encoded voice calling, two-way text messaging, and a globally interconnected network supporting roaming between operators. This was referred to as “second generation” or “2G” mobile technology.

The third-generation (3G) mobile technology that succeeded GSM in global networks was based on the Universal Mobile Telecommunications System (UMTS) standards. UMTS introduced support for new, higher frequency bands with additional capacity, and applied new modulation techniques that used spectrum more efficiently. Combined with improved terminal technology enabled by semiconductor miniaturization, this system became the platform for the first widely deployed mobile data services, enabling email, basic media services and, in some cases, web access.

By the time 3G technology was mature and fully deployed, widespread adoption of high-speed Internet access in wireline networks had made Web-based services and applications prevalent in the lives of most customers. Although technologies such as High-Speed Downlink Packet Access (HSDPA) were introduced to augment the data services of 3G networks, a solution providing genuinely high-speed mobile data service was still needed.

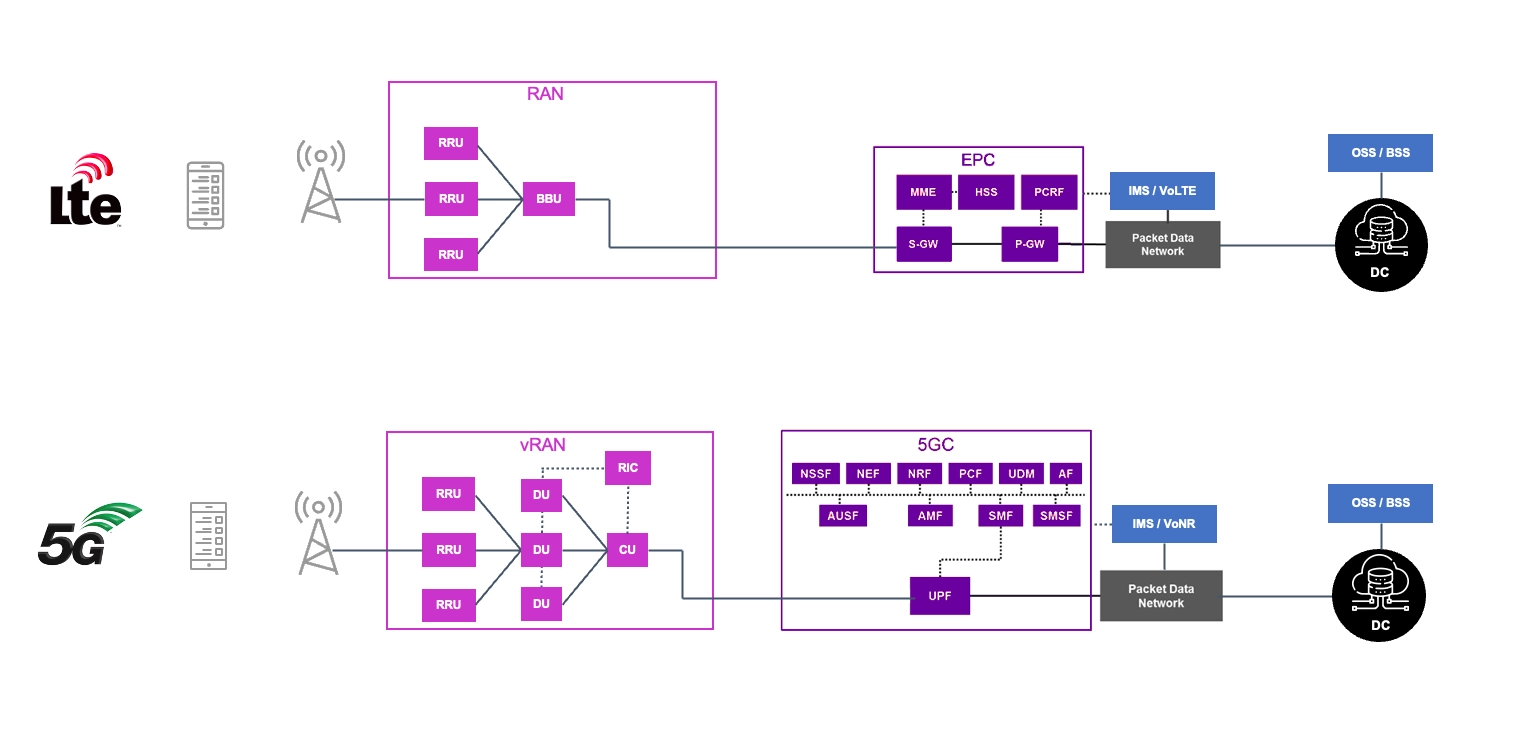

The Long Term Evolution (LTE) standards became the successful and dominant fourth-generation (4G) network technology. LTE was designed around packet data from the outset, which made it the first technology to deliver true high-speed mobile Internet access. It was supported by an all-packet core data network called the Evolved Packet Core (EPC). Eliminating the circuit-switched paradigm in LTE also paved the way for high-fidelity (wideband) voice codecs, voice over IP (called Voice over LTE, or VoLTE) and rich media communications services supported by the IP Multimedia Subsystem (IMS).

Although LTE capably supported real-time interactive communication services, user expectations steadily shifted toward new experiences and applications such as high-definition streaming media, immersive gaming, video conferencing, web collaboration and the Internet of Things (IoT). When delivered over a mobile network, these cloud-centric services impose new requirements for ubiquitous performance, capacity, and low-latency operation, and they are therefore among the many sources of demand for a new fifth-generation (5G) mobile network technology.

5G Network Concepts

From its inception, the project to develop 5G standards had three main focus areas for enhancing mobile network capabilities:

- Enhanced Mobile Broadband (eMBB)

- Ultra-Reliable Low-Latency Communications (URLLC)

- Massive Machine-Type Communications (mMTC)

Enhanced Mobile Broadband (eMBB)

Enhanced Mobile Broadband (eMBB), the first capability brought to market, focuses on higher throughput and network capacity: it delivers multigigabit over-the-air performance to individual users, and supports a high density of such users in a service area. The key enablers of eMBB are additional spectrum, including new millimeter wave (mmWave) bands, and the densification of base stations, which distributes the total user load across more radio sites, including lower-power small cells.
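To make the two eMBB enablers concrete, the sketch below uses the idealized Shannon bound, C = B · log2(1 + SNR), as a stand-in for link throughput. The bandwidths, the 20 dB SNR, and the user counts are illustrative assumptions, and the model ignores MIMO, protocol overhead, and interference, so it shows trends rather than achievable rates.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Idealized Shannon bound C = B * log2(1 + SNR) for a single link."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)


def per_user_throughput_bps(bandwidth_hz: float, snr_db: float, users_per_cell: int) -> float:
    """Assumes the cell's capacity is shared equally among its active users."""
    return shannon_capacity_bps(bandwidth_hz, snr_db) / users_per_cell


# Illustrative (assumed) scenarios, all at 20 dB SNR with 100 users in the service area.
baseline = per_user_throughput_bps(20e6, 20, users_per_cell=100)       # one 20 MHz carrier, one site
more_spectrum = per_user_throughput_bps(400e6, 20, users_per_cell=100)  # 400 MHz carrier, one site
densified = per_user_throughput_bps(400e6, 20, users_per_cell=25)      # same load over 4 small cells

for label, value in [("baseline", baseline), ("more spectrum", more_spectrum), ("densified", densified)]:
    print(f"{label:>14}: {value / 1e6:8.1f} Mbit/s per user")
```

Twenty times the bandwidth gives twenty times the per-user rate at the same SNR, and spreading the same users over four cells multiplies it by four again, which is why both enablers are pursued together.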

Ultra-Reliable Low-Latency Communications (URLLC)

The Ultra-Reliable Low-Latency Communications (URLLC) objective of 5G serves applications with mission-critical, safety-oriented, and real-time control requirements, where network performance must be deterministic, with packet loss effectively eliminated and transit latency kept to a minimum. Because many application architectures are expected to move to a model in which computation and datacenter resources are not collocated with the operational environment, point of use, or controlled device, persistent, high-performance, high-reliability connectivity is needed to make this separation invisible.
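A quick propagation calculation shows why the distance to remote compute resources matters for URLLC. This sketch assumes light travels through fiber at about c / 1.47 (roughly 4.9 µs per km) and treats whatever is left of the delay budget as available for propagation; the budget figures in the example are hypothetical, and real deployments must also account for radio, processing, and queuing delays.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
FIBER_REFRACTIVE_INDEX = 1.47  # assumed typical value for single-mode fiber
# Convert m/s to km/ms: divide by 1000 (m -> km) and by 1000 (s -> ms).
FIBER_SPEED_KM_PER_MS = SPEED_OF_LIGHT_M_PER_S / FIBER_REFRACTIVE_INDEX / 1_000_000


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber; processing and queuing are ignored."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


def max_compute_distance_km(rtt_budget_ms: float, other_delays_ms: float) -> float:
    """Farthest fiber distance to compute if `other_delays_ms` of the round-trip budget is spent elsewhere."""
    remaining_ms = rtt_budget_ms - other_delays_ms
    if remaining_ms <= 0:
        return 0.0
    return remaining_ms / 2 * FIBER_SPEED_KM_PER_MS


# Hypothetical budget: 5 ms round trip, 3 ms of it consumed by radio and processing.
print(f"{max_compute_distance_km(5.0, 3.0):.0f} km of fiber at most")
```

Every millisecond of round-trip budget left over buys only about a hundred kilometers of fiber, so tight URLLC budgets push compute toward the network edge.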

Massive Machine-Type Communications (mMTC)

Massive Machine-Type Communications (mMTC) applications in 5G target architectures in which the predominant terminal is not a human subscriber's device, but an intelligent machine that depends on robust network access to carry out its mission. Key factors for this objective are dramatically expanding the total population of active, connected devices the network can support, and minimizing communications overhead to increase overall network efficiency.
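The scale of the mMTC problem can be sketched with simple arithmetic. The density figure below is the ITU-R IMT-2020 connection-density target of 1,000,000 devices per km²; the circular cell, the one-hour reporting interval, and the 20-byte payload carrying 80 bytes of overhead are assumptions chosen only for illustration.

```python
import math

IMT2020_DENSITY_PER_KM2 = 1_000_000  # ITU-R IMT-2020 connection-density target


def devices_in_cell(cell_radius_km: float, density_per_km2: float = IMT2020_DENSITY_PER_KM2) -> int:
    """Devices inside a circular cell (a simplification of real cell shapes)."""
    return round(math.pi * cell_radius_km ** 2 * density_per_km2)


def access_attempts_per_second(devices: int, report_interval_s: float) -> float:
    """Average uplink access attempts if every device reports once per interval."""
    return devices / report_interval_s


def overhead_share(payload_bytes: int, overhead_bytes: int) -> float:
    """Fraction of each transmission spent on overhead rather than payload."""
    return overhead_bytes / (payload_bytes + overhead_bytes)


devices = devices_in_cell(0.5)  # hypothetical 500 m cell radius
print(devices, access_attempts_per_second(devices, 3600), overhead_share(20, 80))
```

Even with each device reporting only hourly, a single cell sees hundreds of access attempts per second, and most of every small transmission is overhead, which is why mMTC work concentrates on connection capacity and lean signaling.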

5G Standardization

The Third Generation Partnership Project (3GPP) has been, and remains, the main global standards venue for mobile radio and network technology, including 4G and now 5G. The first 5G specifications arrived with Release 15, a wave of specifications initially delivered in 2017.

This initial release provided the foundation of the 5G New Radio (5G NR) air interface, which added a range of globally used bands that expanded the “Sub-7” (below 7GHz) frequencies, also referred to as Frequency Range 1 (FR1). 5G NR also introduced so-called “millimeter wave” (mmWave) frequencies in the microwave range, designated Frequency Range 2 (FR2), which had not previously been used in terrestrial mobile communications. This dramatic increase in spectrum available for the air interface is largely what makes multigigabit speeds to individual devices possible in 5G, offering a degree of parity with optical broadband technology in the fixed network domain.

Release 15 specifications also defined a new packet core for 5G, called the “5G Core” (5GC). This made it possible to deploy 5G New Radio base stations (called “gNBs”) either connected to existing Evolved Packet Core networks in “Non-Stand Alone” (NSA) mode for backward compatibility, or connected to the 5G Core of a 5G-only network in “Stand Alone” (SA) mode.

The 5G standards are notable for replacing many of the mobile-specific signaling and control protocols of earlier generations with web-centric alternatives that closely align with the technology used for cloud native applications (HTTP/2, REST APIs, JSON, etc.). This design strategy also supported a formal separation of control plane and user (data) plane entities in the 5GC, building on the “Control and User Plane Separation” (CUPS) approach first introduced for the EPC in Release 14. Combined with software-centric implementations of the packet core subsystems, this separation allows greater scalability, better balancing of hardware resources, and the placement of individual instances in specific areas of the network to optimize latency and transport utilization.
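As an illustration of this web-centric style, 5GC network functions locate one another through the Network Repository Function (NRF) using HTTP/2 REST requests. The sketch below only builds a discovery request URL, following the Nnrf_NFDiscovery resource path and the mandatory `target-nf-type` and `requester-nf-type` query parameters described in 3GPP TS 29.510; the NRF host name is hypothetical, and the code sends nothing, since Python's standard library HTTP client does not support HTTP/2.

```python
from urllib.parse import urlencode


def nf_discovery_url(api_root: str, target_nf_type: str, requester_nf_type: str, **optional: str) -> str:
    """Build an NRF discovery URL, e.g. an AMF asking where to find SMF instances."""
    query = {"target-nf-type": target_nf_type, "requester-nf-type": requester_nf_type}
    # Python keyword names use underscores; the API's query parameters use hyphens.
    query.update({name.replace("_", "-"): value for name, value in optional.items()})
    return f"{api_root.rstrip('/')}/nnrf-disc/v1/nf-instances?{urlencode(query)}"


# Hypothetical NRF address; the AMF is discovering SMF instances.
print(nf_discovery_url("https://nrf.example.com", "SMF", "AMF"))
```

Optional filters, such as a `dnn` (data network name) keyword, are passed through as extra query parameters; which filters a given NRF honors should be checked against TS 29.510.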

Dynamic Spectrum Sharing (DSS), supported from Release 15 and enhanced in Release 16, enables the 5G Radio Access Technology (RAT) to be deployed simultaneously with legacy 4G systems in the same frequency bands. This allows 5G to be deployed where new 5G-specific spectrum bands are unavailable or not yet ready for use. Offering a further benefit to spectrum-constrained operators, the 5G New Radio Unlicensed (NR-U) capability added in Release 16 allows unlicensed spectrum bands (typically shared with Wi-Fi) to be used under the control of the 5G network.

Another key capability is Network Slicing, defined in the 5G Core from Release 15 and extended in Release 16. Network Slicing enables the creation of end-to-end partitions of network resources (including on shared infrastructure), allowing individual “slices” to be allocated to specific classes of users, specific applications, or even individual customers (in the case of Private 5G).
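In the 5G Core a slice is identified by an S-NSSAI, which combines an 8-bit Slice/Service Type (SST) with an optional 24-bit Slice Differentiator (SD). The sketch below models that identifier and its JSON form (`sst` as an integer, `sd` as six hex digits), following the structure described in 3GPP TS 23.501 and TS 29.571; the class and helper names are illustrative, and only a subset of the standardized SST values is listed.

```python
from dataclasses import dataclass
from typing import Optional

# Subset of the standardized SST values; other values are operator-specific.
STANDARD_SST = {1: "eMBB", 2: "URLLC", 3: "MIoT", 4: "V2X"}
HEX_DIGITS = set("0123456789abcdefABCDEF")


@dataclass(frozen=True)
class Snssai:
    sst: int                  # Slice/Service Type, 8 bits
    sd: Optional[str] = None  # optional Slice Differentiator, 24 bits as 6 hex digits

    def __post_init__(self) -> None:
        if not 0 <= self.sst <= 255:
            raise ValueError("SST must fit in 8 bits")
        if self.sd is not None and (len(self.sd) != 6 or not set(self.sd) <= HEX_DIGITS):
            raise ValueError("SD must be exactly 6 hex digits")

    def to_json(self) -> dict:
        """JSON-style dict; `sd` is omitted when the slice has no differentiator."""
        body: dict = {"sst": self.sst}
        if self.sd is not None:
            body["sd"] = self.sd
        return body

    def service_label(self) -> str:
        return STANDARD_SST.get(self.sst, "operator-specific")


# Hypothetical slices: a default broadband slice and a URLLC slice for one private 5G customer.
default_slice = Snssai(sst=1)
factory_slice = Snssai(sst=2, sd="00a1b2")
print(default_slice.to_json(), factory_slice.to_json(), factory_slice.service_label())
```

The standardized values 1 to 3 line up with the eMBB, URLLC and mMTC (MIoT) objectives, while the SD lets an operator run several slices of the same type, for example one per enterprise customer.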

More recently, the Release 17 specifications added a new capability for access using Non-Terrestrial Networks (NTN). Although specialized satellite uplink or backhaul systems have long been used alongside terrestrial mobile networks, this is the first time the radio interface and procedures of the terrestrial network have been reused to provide direct access to terminals via satellite as an integrated system.

As of Release 17, FR2 has also been extended to cover 52.6GHz to 71GHz, adding nearly 20GHz of spectrum in the region of 60GHz.
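The frequency ranges can be captured in a small lookup. The boundaries used here (FR1 from 410MHz to 7125MHz, FR2-1 from 24.25GHz to 52.6GHz, and the Release 17 FR2-2 extension from 52.6GHz to 71GHz) follow 3GPP TS 38.101 as best understood and should be verified against the current release; treating every boundary as inclusive is a simplification.

```python
# Frequency ranges in MHz; boundaries treated as inclusive, first match wins.
FREQUENCY_RANGES_MHZ = [
    ("FR1", 410, 7_125),
    ("FR2-1", 24_250, 52_600),
    ("FR2-2", 52_600, 71_000),  # Release 17 extension
]


def frequency_range(freq_mhz: float) -> str:
    """Return the name of the first range containing the frequency."""
    for name, low_mhz, high_mhz in FREQUENCY_RANGES_MHZ:
        if low_mhz <= freq_mhz <= high_mhz:
            return name
    return "outside FR1/FR2"


fr2_2_width_ghz = (71_000 - 52_600) / 1000  # width of the Release 17 extension
for f in (3_500, 28_000, 60_000, 10_000):
    print(f, frequency_range(f))
print(fr2_2_width_ghz)
```

The 18.4GHz width of FR2-2 is the “nearly 20GHz” figure; for comparison, the whole of FR1 spans less than 7GHz.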

RAN Architecture Progression

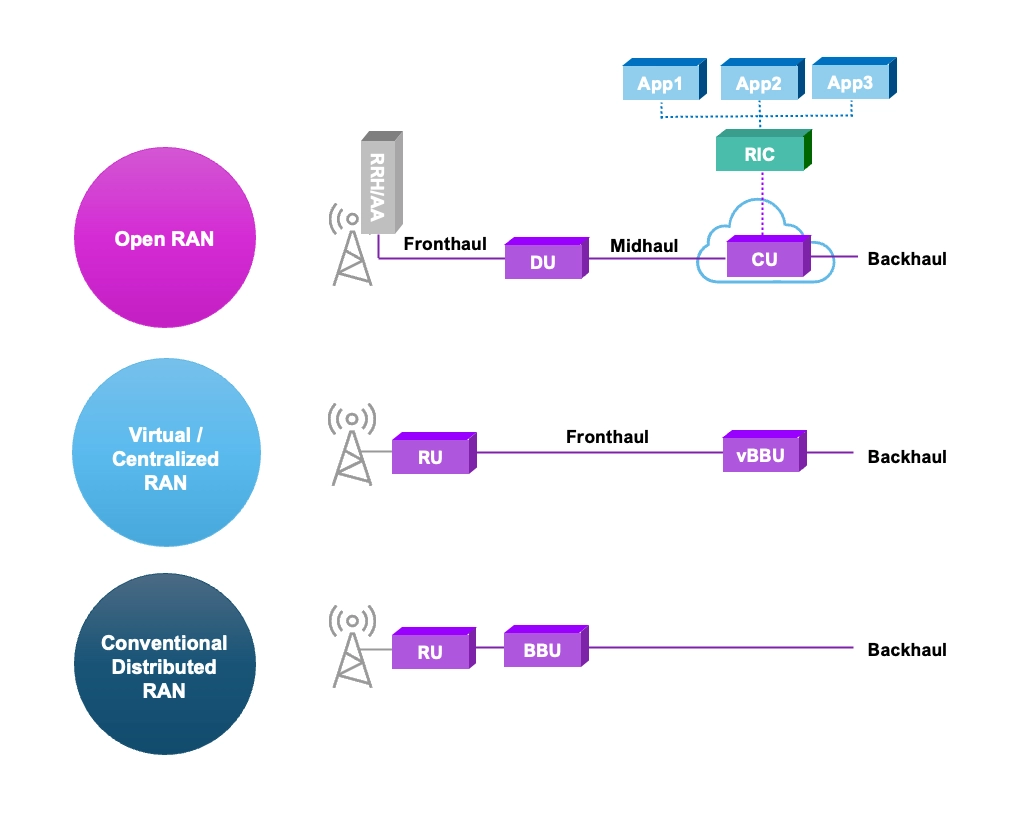

The Radio Access Network (RAN) is a key element of the 5G architecture, and a large part of the technical focus of standards. A number of different architectures have emerged over time for the placement and interconnection of RAN components.

The original model for mobile systems in macro-cellular networks was a fully Distributed Radio Access Network (or “D-RAN”) architecture, where radio equipment, digital / baseband processing, and network switching, transport or routing elements were all present together at a “self contained” base station site. This approach minimized the site's reliance on any other network elements or infrastructure, but required a significant minimum baseline of power, space, facilities and ancillary equipment to create a base station or “Node B”.

With the establishment of standards such as the Common Public Radio Interface (CPRI), it became possible to separate the radio and antenna components from the elements performing the digital / baseband layer functions of the RAN, using a “fronthaul” connection (typically over optical fiber), thereby minimizing the amount of equipment needed at a base station site. This was especially useful as the network continued to expand and densify, giving rise to situations where a radio site was space constrained, or where coverage requirements called for radio units at locations where a traditional base station deployment was not possible or not desirable. In these cases, the digital or baseband units (BBUs), along with any additional elements needed to provide connectivity to the packet core, could be relocated to a centralized hub site, in what is now known as a Centralized Radio Access Network (or “C-RAN”) architecture.
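One reason fronthaul became a bottleneck is that CPRI carries continuously sampled I/Q data, so its bit rate depends on bandwidth and antenna count rather than on user traffic. The sketch below uses the widely cited LTE 20MHz reference case (30.72 million samples per second, 15-bit I and Q components) and applies CPRI's control-word overhead (16/15) and 8B/10B line coding (10/8); other sample widths, line codings, and compression schemes change the result.

```python
from fractions import Fraction


def cpri_line_rate_bps(sample_rate_sps: int, bits_per_component: int, antennas: int) -> Fraction:
    """Approximate CPRI line rate for uncompressed I/Q streams."""
    iq_payload = sample_rate_sps * 2 * bits_per_component * antennas  # I and Q per sample
    with_control_words = iq_payload * Fraction(16, 15)  # 1 control word per 16-word basic frame
    return with_control_words * Fraction(10, 8)         # 8B/10B line coding


one_antenna = cpri_line_rate_bps(30_720_000, 15, antennas=1)
eight_antennas = cpri_line_rate_bps(30_720_000, 15, antennas=8)
print(float(one_antenna) / 1e6, float(eight_antennas) / 1e9)
```

A single 20MHz antenna-carrier already needs about 1.2Gbit/s of fronthaul, and the rate grows linearly with the number of antennas, which is what makes a more efficient, packet-based fronthaul attractive.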

The next step in this evolution was the Virtualized Radio Access Network (or “V-RAN”) architecture. In this approach, virtualized or containerized network functions are implemented in software on general-purpose computing platforms, replacing specialized hardware implementations of digital and baseband functions. Adapting the radio unit (RU) interface to a packet-based format such as Enhanced CPRI (eCPRI) allows both the radio-facing and core-facing (or “backhaul”) interfaces of the Virtual Baseband Unit (vBBU) to use common Ethernet-based technology for all network connections, in line with the networking technology standard in carrier datacenters. This Network Function Virtualization (or “NFV”) approach allows RAN workloads to be deployed and managed alongside a pool of similar workloads in the carrier datacenter, using a common framework and shared compute resources.
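Pooling is what makes the NFV model attractive: virtualized baseband functions share servers with other workloads instead of occupying dedicated hardware. The sketch below uses first-fit decreasing, a simple bin-packing heuristic, to place workloads by CPU-core demand; the workload and server names and the core counts are hypothetical, and real orchestration platforms weigh many more constraints, such as latency, acceleration hardware, and affinity.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """A general-purpose server in the carrier datacenter pool (hypothetical)."""
    name: str
    free_cores: int
    workloads: list[str] = field(default_factory=list)


def place_first_fit_decreasing(demands: dict[str, int], servers: list[Server]) -> dict[str, str]:
    """Assign each workload to the first server with enough free cores, largest demands first."""
    placement: dict[str, str] = {}
    for workload, cores in sorted(demands.items(), key=lambda item: item[1], reverse=True):
        for server in servers:
            if server.free_cores >= cores:
                server.free_cores -= cores
                server.workloads.append(workload)
                placement[workload] = server.name
                break
        else:  # no server had room for this workload
            raise RuntimeError(f"no capacity for {workload} ({cores} cores)")
    return placement


pool = [Server("hub-1", free_cores=32), Server("hub-2", free_cores=32)]
demands = {"vBBU-a": 16, "analytics": 8, "vBBU-b": 16, "core-upf": 12, "video-cache": 8}
print(place_first_fit_decreasing(demands, pool))
```

Placing the largest demands first tends to leave fewer unusable fragments of capacity; ties keep their original order because Python's sort is stable even with `reverse=True`.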

Open RAN

Open RAN standards established by the O-RAN ALLIANCE seek to extend the vRAN model by disaggregating BBU and RAN components into a fully distributed architecture, while defining standard interfaces between the subsystems, allowing them to be interchangeable and interoperable.

The current O-RAN architecture provides the functional separation of RAN components into a Radio Unit (RU), Distributed Unit (DU), Centralized Unit (CU), and Real-Time and non-Real-Time Radio Intelligent Controllers (rtRIC and nrtRIC). The architecture also includes a Service Management and Orchestration (SMO) component, which coordinates the deployment, management, and telemetry of each of the distributed software network function components, including interactions with the O-Cloud infrastructure that hosts the network functions. Each of these elements requires a network interconnection to function, as well as a packet switching or transport function if the adjacent component is located at a remote site.
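The connections between these elements are defined as named interfaces. The lookup below records the commonly cited ones (Open Fronthaul between RU and DU, F1 between DU and CU, NG toward the 5G Core, E2 from the real-time RIC to the DU and CU, A1 between the two RICs, O1 from the SMO to managed functions, and O2 from the SMO to the O-Cloud). It is a simplified map for orientation, not a complete or normative list; O-RAN specifications call the real-time RIC the Near-Real-Time RIC, and the non-real-time RIC is hosted within the SMO.

```python
from typing import Optional

# Simplified, non-normative map of O-RAN interfaces, keyed by unordered endpoint pairs.
# Labels follow this document: "rtRIC" is O-RAN's Near-RT RIC, "nrtRIC" its Non-RT RIC.
INTERFACES = {
    frozenset({"RU", "DU"}): "Open Fronthaul",
    frozenset({"DU", "CU"}): "F1",
    frozenset({"CU", "5GC"}): "NG",
    frozenset({"rtRIC", "DU"}): "E2",
    frozenset({"rtRIC", "CU"}): "E2",
    frozenset({"nrtRIC", "rtRIC"}): "A1",
    frozenset({"SMO", "DU"}): "O1",
    frozenset({"SMO", "CU"}): "O1",
    frozenset({"SMO", "rtRIC"}): "O1",
    frozenset({"SMO", "O-Cloud"}): "O2",
}


def interface_between(a: str, b: str) -> Optional[str]:
    """Name of the interface joining two components, or None if they are not directly linked."""
    return INTERFACES.get(frozenset({a, b}))


def links_for(component: str) -> list[tuple[str, str]]:
    """All (peer, interface) pairs for one component, sorted for stable output."""
    links = []
    for pair, name in INTERFACES.items():
        if component in pair:
            (peer,) = pair - {component}  # each key holds exactly two components
            links.append((peer, name))
    return sorted(links)


print(links_for("CU"))
```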

In this architecture, the Radio Unit (RU) is the entity responsible for digitizing radio-layer data for the upstream Distributed Unit (DU) that controls it. The RU typically includes transceivers, power amplifiers, and digitization functions for each of the spatial streams in a Multiple Input / Multiple Output (MIMO) configuration, along with antenna control and the packet processing for the fronthaul connection to the DU.
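The digitization step can be illustrated by mapping complex baseband samples onto signed fixed-point I/Q codes. This sketch assumes the samples have already been produced by the RU's analog-to-digital conversion and filtering, and uses a 16-bit word and a full scale of 1.0 purely as example parameters; real fronthaul implementations may use other widths and compression.

```python
import cmath


def quantize_iq(samples: list[complex], bits: int = 16, full_scale: float = 1.0) -> list[tuple[int, int]]:
    """Convert complex samples to signed (I, Q) integer codes, clipping out-of-range values."""
    max_code = 2 ** (bits - 1) - 1
    min_code = -max_code - 1

    def to_code(x: float) -> int:
        return max(min_code, min(max_code, round(x / full_scale * max_code)))

    return [(to_code(s.real), to_code(s.imag)) for s in samples]


# Eight samples of a half-scale complex tone, a hypothetical test signal.
tone = [0.5 * cmath.exp(2j * cmath.pi * k / 8) for k in range(8)]
print(quantize_iq(tone))
```

Clipping rather than wrapping keeps an overdriven sample at the edge of the range instead of flipping its sign, which would otherwise inject a large error into the stream.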

The Distributed Unit (DU) provides the link-layer (RLC and MAC) control functions, including scheduling, for the Radio Units (RUs) attached to it, as well as a portion of the Physical (PHY) layer signal processing: encoding, scrambling, modulation, MIMO layer mapping and beamforming for downlink traffic, and equalization for uplink traffic.
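Two of the DU's PHY steps, scrambling and modulation, are compact enough to sketch. The code follows the length-31 Gold sequence and the QPSK mapping d = ((1 - 2*b0) + j(1 - 2*b1)) / sqrt(2) as given in 3GPP TS 38.211 (sections 5.2.1 and 5.1.3); the `c_init` value in the example is hypothetical (real channels derive it from identifiers such as the cell ID), and channel coding, layer mapping, and beamforming are omitted.

```python
import math


def gold_sequence(c_init: int, length: int) -> list[int]:
    """Pseudo-random sequence c(n) built from two length-31 m-sequences."""
    nc = 1600  # the first Nc outputs are discarded
    x1 = [0] * (nc + length + 31)
    x2 = [0] * (nc + length + 31)
    x1[0] = 1  # x1 starts as 1, 0, 0, ..., 0
    for i in range(31):
        x2[i] = (c_init >> i) & 1  # x2 is initialised from the bits of c_init
    for n in range(nc + length):
        x1[n + 31] = (x1[n + 3] + x1[n]) % 2
        x2[n + 31] = (x2[n + 3] + x2[n + 2] + x2[n + 1] + x2[n]) % 2
    return [(x1[n + nc] + x2[n + nc]) % 2 for n in range(length)]


def scramble(bits: list[int], c_init: int) -> list[int]:
    """XOR the coded bits with the Gold sequence; applying it twice restores the input."""
    sequence = gold_sequence(c_init, len(bits))
    return [(b + c) % 2 for b, c in zip(bits, sequence)]


def qpsk_modulate(bits: list[int]) -> list[complex]:
    """Map each bit pair (b0, b1) to ((1 - 2*b0) + j(1 - 2*b1)) / sqrt(2)."""
    if len(bits) % 2:
        raise ValueError("QPSK needs an even number of bits")
    scale = 1 / math.sqrt(2)
    return [complex(1 - 2 * b0, 1 - 2 * b1) * scale for b0, b1 in zip(bits[0::2], bits[1::2])]


coded_bits = [0, 1, 1, 0, 1, 1, 0, 0]  # hypothetical output of channel coding
symbols = qpsk_modulate(scramble(coded_bits, c_init=0x1234))
print(symbols)
```

Scrambling randomizes the bit pattern so that interference from neighboring cells averages out, and because it is a plain XOR the receiver undoes it with the same sequence.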

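Traffic from one or more DUs is aggregated by the Centralized Unit (CU), which hosts the higher-layer protocols (RRC, PDCP and SDAP) and performs functions such as radio connection and bearer management, handover (mobility) control, and load distribution across its DUs. These functions are less signal-processing intensive, but because the CU aggregates several DUs, they generally entail a greater total volume of packet data.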
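Handover control is one of the CU-side functions that is easy to illustrate. In 5G, the terminal evaluates measurement events configured by the RRC layer and reports them, and the network then decides whether to hand over. The sketch below implements a simplified form of the A3 event from 3GPP TS 38.331 (neighbor better than serving by an offset), with the cell- and frequency-specific offsets set to zero and time-to-trigger counted in measurement samples rather than milliseconds; the thresholds and signal levels in the example are hypothetical.

```python
def a3_entering(serving_dbm: float, neighbor_dbm: float, offset_db: float, hysteresis_db: float) -> bool:
    """Simplified A3 entering condition: Mn - Hys > Mp + Off (other offsets assumed zero)."""
    return neighbor_dbm - hysteresis_db > serving_dbm + offset_db


def should_hand_over(serving: list[float], neighbor: list[float], offset_db: float,
                     hysteresis_db: float, time_to_trigger: int) -> bool:
    """True only if the A3 condition held for each of the last `time_to_trigger` samples."""
    if len(serving) != len(neighbor):
        raise ValueError("measurement series must be the same length")
    if time_to_trigger < 1 or len(serving) < time_to_trigger:
        return False
    recent = zip(serving[-time_to_trigger:], neighbor[-time_to_trigger:])
    return all(a3_entering(s, n, offset_db, hysteresis_db) for s, n in recent)


# Hypothetical RSRP samples (dBm) as a terminal moves toward a neighboring cell.
serving_rsrp = [-100.0, -100.0, -101.0, -102.0]
neighbor_rsrp = [-99.0, -95.0, -94.0, -93.0]
print(should_hand_over(serving_rsrp, neighbor_rsrp, offset_db=3.0, hysteresis_db=1.0, time_to_trigger=3))
```

The offset, hysteresis and time-to-trigger together suppress “ping-pong” handovers when two cells are received at nearly the same level.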

The Radio Intelligent Controller (RIC) is a specialized, discrete element in the O-RAN architecture that performs service and policy management, RAN analytics, and Artificial Intelligence (AI) / Machine Learning (ML) functions to provide automated, intelligent management and optimization of radio resources and operations in complex networks. The non-Real-Time RIC works on long timescales (1s and above), while the Real-Time RIC, formally called the Near-Real-Time RIC in O-RAN specifications, works on much shorter timescales (roughly 10ms to 1s). A modular framework has also been developed to extend RIC logic and functionality through open, standardized interfaces, using rApps (for the nrtRIC) and xApps (for the rtRIC).
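The division of labor between the two RICs can be sketched as a slow policy and a fast control step. Everything below is hypothetical: the `Policy` and `CellReport` types stand in for a policy set by an rApp in the non-Real-Time RIC and for per-cell load reports from the RAN, and the pairing rule is a toy load-balancing heuristic rather than any standardized xApp behavior or SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy set by an rApp in the non-Real-Time RIC (changes over seconds or longer)."""
    max_prb_utilization: float  # ceiling on physical resource block usage, 0.0 to 1.0


@dataclass(frozen=True)
class CellReport:
    """Hypothetical per-cell load report handled by an xApp on a sub-second cycle."""
    cell_id: str
    prb_utilization: float


def xapp_step(policy: Policy, reports: list[CellReport]) -> list[tuple[str, str]]:
    """Pair each overloaded cell (busiest first) with a lightly loaded cell (least busy first)."""
    overloaded = sorted((r for r in reports if r.prb_utilization > policy.max_prb_utilization),
                        key=lambda r: r.prb_utilization, reverse=True)
    spare = sorted((r for r in reports if r.prb_utilization <= policy.max_prb_utilization),
                   key=lambda r: r.prb_utilization)
    return [(hot.cell_id, cool.cell_id) for hot, cool in zip(overloaded, spare)]


policy = Policy(max_prb_utilization=0.8)
reports = [CellReport("A", 0.95), CellReport("B", 0.40), CellReport("C", 0.85), CellReport("D", 0.60)]
print(xapp_step(policy, reports))  # each pair suggests offloading traffic from the first cell to the second
```

Keeping the slow policy decision separate from the fast per-cycle step mirrors the split of timescales between the two controllers: the rApp can retune the ceiling occasionally, while the xApp applies it on every cycle.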